Road Scene Segmentation

In this project, road scene segmentation was implemented on multiple levels using Python. To begin, lane line detection was implemented using OpenCV image processing, allowing for the determination of the expected path of the vehicle. The system was then expanded to include object detection through the incorporation of a pre-trained neural network, making it more robust to unexpected obstacles such as pedestrians or other cars.

In this project, road scene segmentation was implemented on multiple levels using Python. To begin, lane line detection was implemented using OpenCV image processing, allowing for the determination of the expected path of the vehicle. The system was then expanded to include object detection through the incorporation of a pre-trained neural network, making it more robust to unexpected obstacles such as pedestrians or other cars.

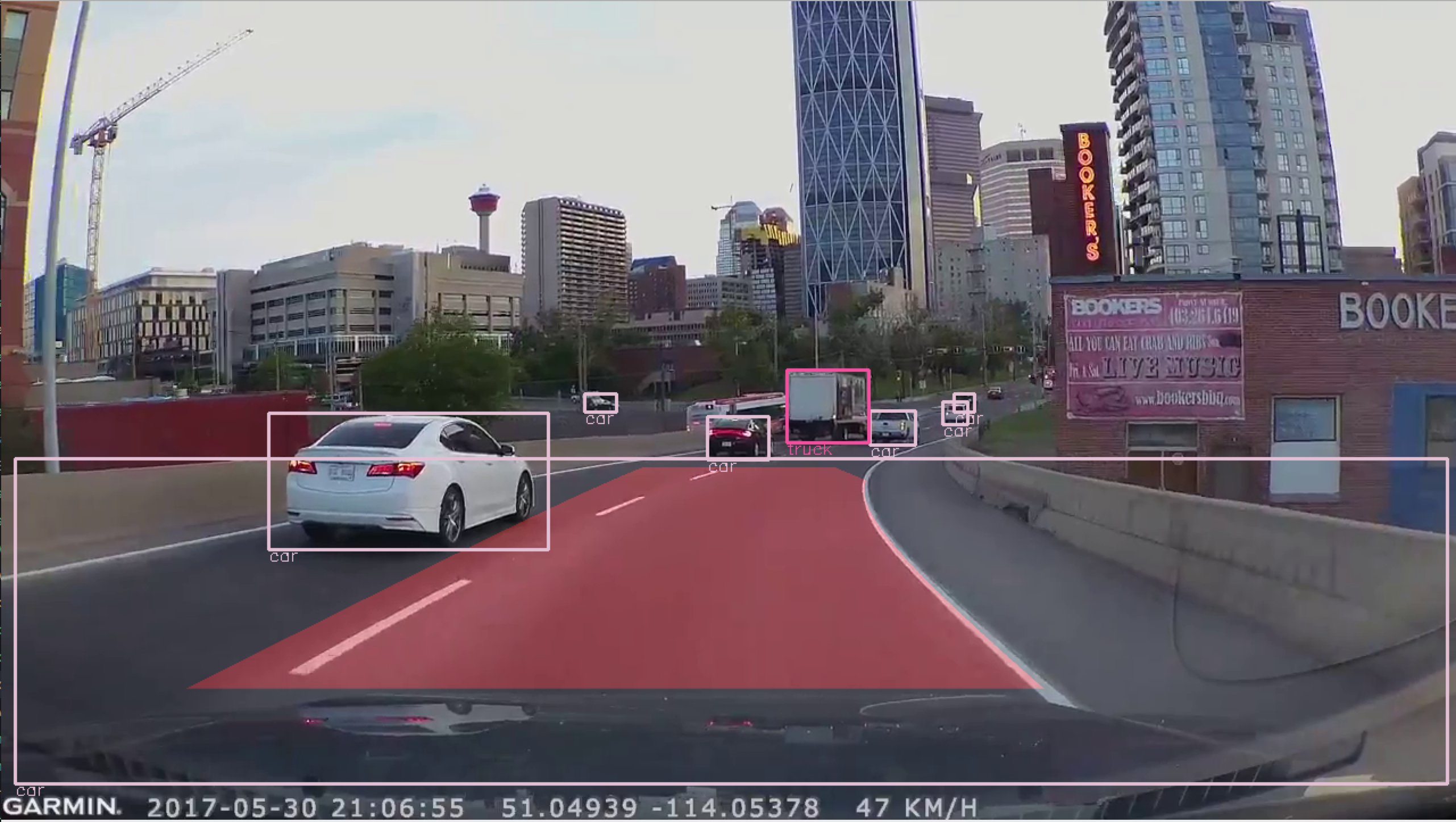

Lane Detection

The end goal of this stage was to be able to produce an overlay on the image indicating the drivable area so that an autonomous vehicle would be able to continue moving along a given path. To achieve this goal, four main image processing steps were implemented: perspective transformation, preprocessing, search, and overlay production.

The image was first transformed to a bird’s eye view to make processing easier using warpPerspective from OpenCV. The image was then viewed in various color spaces using the cvtColor function to filter out irrelevant features. The image was transformed first from the typical RGB color space into the LAB color space: L represents the lightness while A and B represent the green-red and blue-yellow components of the image. It was found that the B channel of the LAB color space was particularly useful for identifying the blue-yellow components of the lane markers. The image was then transformed to the LUV color space where the lightness component was used to highlight the bright white of the lane markers. The two channels – the B channel of the LAB space and the L channel of the LUV space – were added together and a threshold was applied so that only the lane lines would appear in the resulting image.

A sliding window search then assigned pixels to either the left or right lane so a best fit line could be calculated for each one. To get the final overlay, the polygon formed by the best fit lines was transformed back to the original perspective.

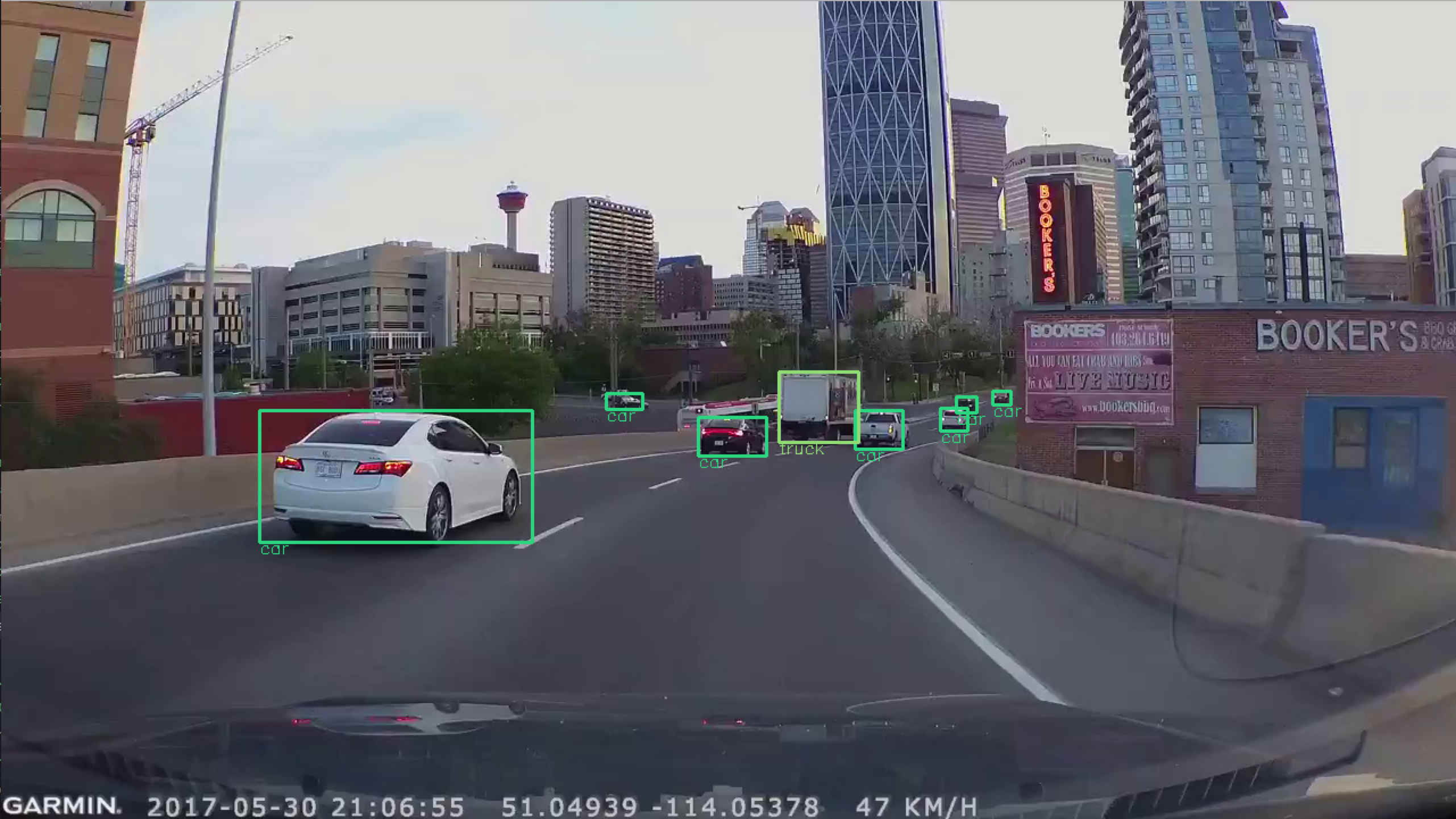

Object Detection

Object detection was added to the processing pipeline to enable the system to detect any obstacles that may appear while driving. To achieve this, the YOLOv3 neural network – built on the open source Darknet framework – was added to the project. The framework provides most functions for using the network to classify parts of the image, but was modified slightly to allow processing a continuous stream of images.

Additionally, a visualization function was added in Python to create output bounding boxes.