Open Source Iris PAD

As my senior thesis at the University of Notre Dame, I created the first open source presentation attack detection (PAD) solution for distinguishing authentic irises from irises covered by textured contact lenses. The software provides a baseline that researchers can compare against and build on when proposing new approaches to PAD in iris biometrics. It relies only on open source resources such as OpenCV, and it is available in both C++ and Python.

A condensed version of my thesis was released as a paper, currently available on arXiv.

Underlying Method

Feature Extraction

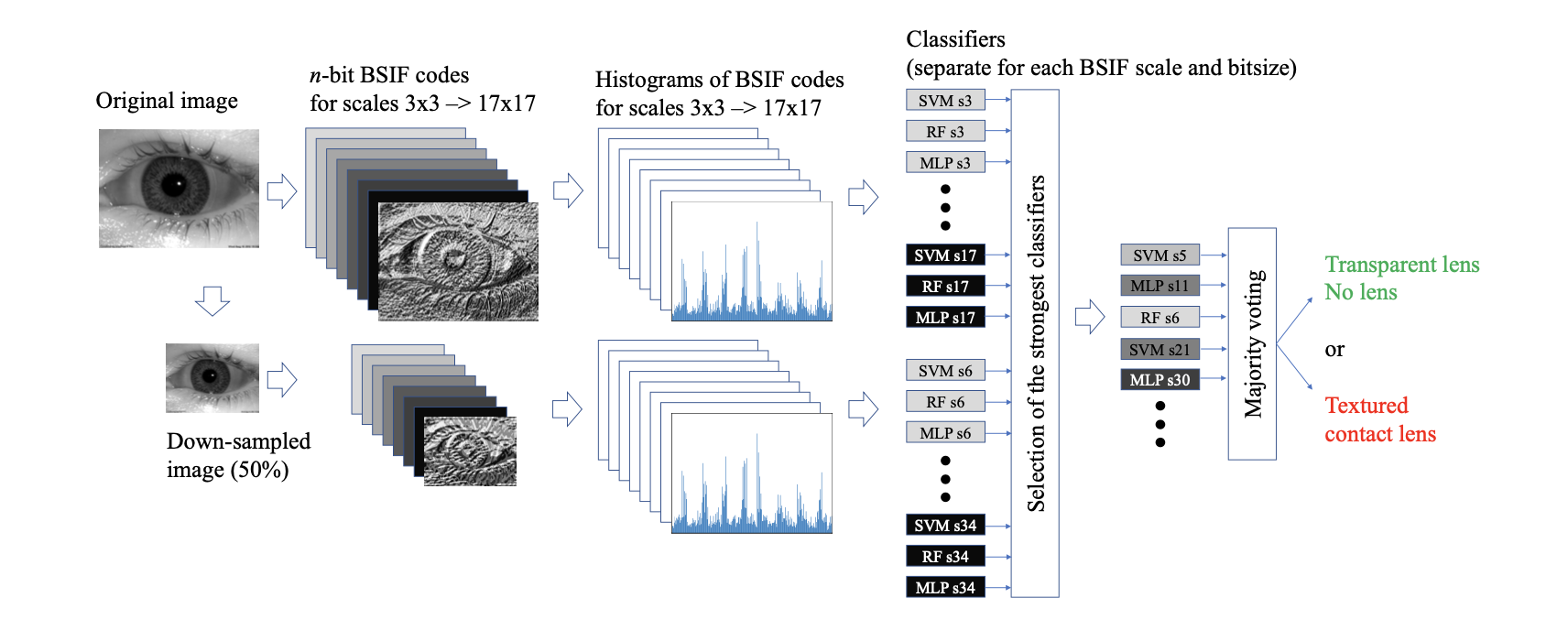

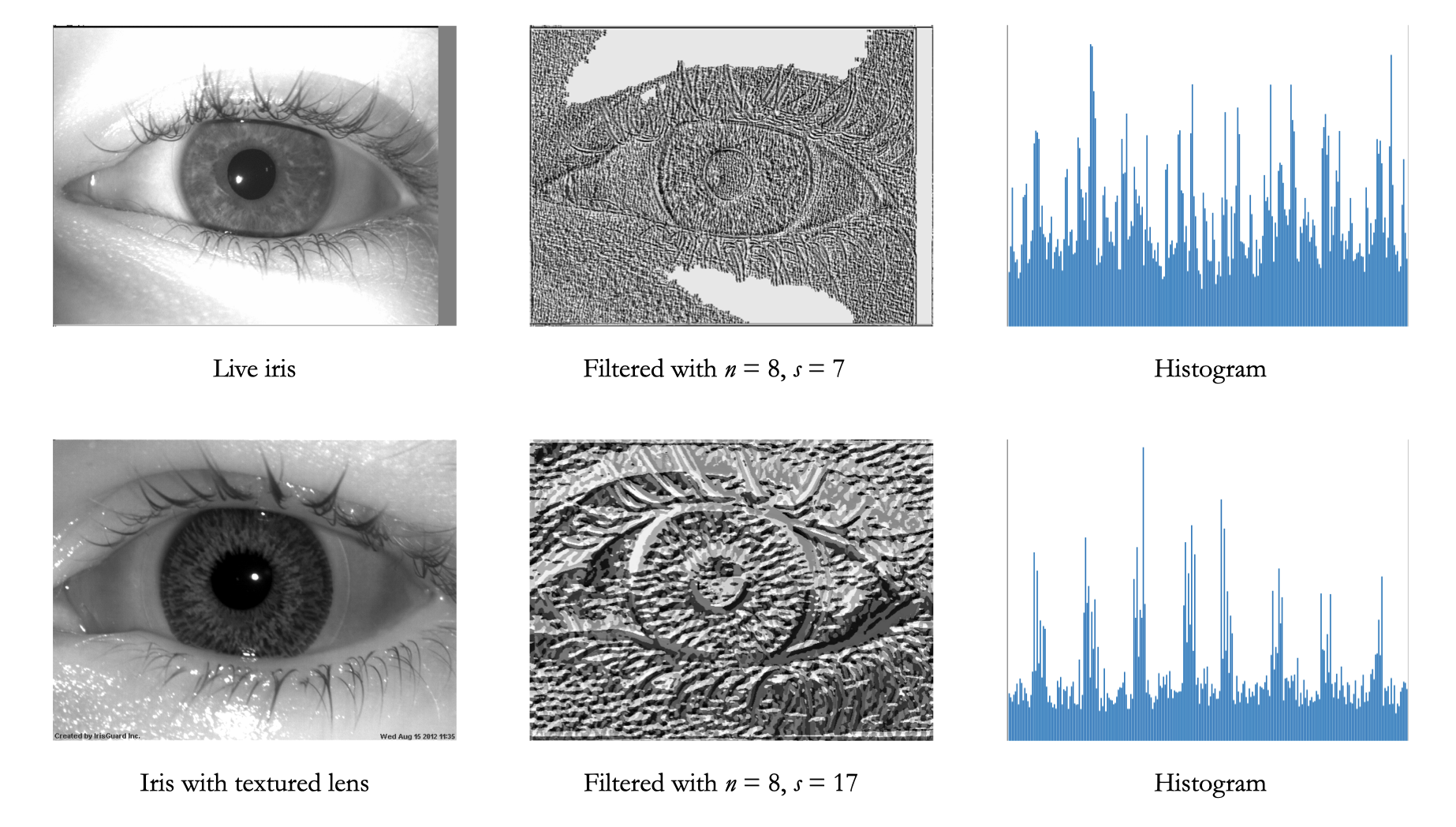

The feature extraction is based on Binary Statistical Image Features (BSIF), a recent and successful method for iris PAD. In BSIF, an image is filtered with a set of n filters of size s x s, and each filter response is binarized by thresholding at 0. Each pixel is therefore described by an n-bit binary number, where each bit is the binarized response of one filter at that pixel. A histogram with 2^n bins is then computed over these n-bit codes across the entire image.
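
The code computation described above can be sketched in NumPy. This is a minimal illustration, not the released implementation. The filters below are random stand-ins, since real BSIF filters are learned from natural image patches with independent component analysis. The responses are computed as correlations with wrap-around borders, and `bsif_histogram` is a hypothetical name.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def bsif_histogram(image, filters):
    """Return the 2**n-bin BSIF code histogram of a 2-D grayscale image.

    filters has shape (n, s, s): n filters of size s x s.
    """
    image = np.asarray(image, dtype=float)
    n, s, _ = filters.shape
    before, after = s // 2, s - 1 - s // 2
    # Wrap-around padding keeps the code map the same size as the image (assumption).
    padded = np.pad(image, ((before, after), (before, after)), mode="wrap")
    windows = sliding_window_view(padded, (s, s))  # shape (H, W, s, s)
    # Response of every filter at every pixel, computed as a correlation.
    responses = np.einsum("hwij,kij->khw", windows, filters)
    # Binarize each response at 0 and pack the n bits into one integer per pixel.
    codes = np.zeros(image.shape, dtype=np.int64)
    for k in range(n):
        codes |= (responses[k] > 0).astype(np.int64) << k
    # One histogram bin per possible n-bit code.
    return np.bincount(codes.ravel(), minlength=2 ** n)


# Random stand-in filters (8 filters of size 7 x 7); real BSIF filters come from ICA.
rng = np.random.default_rng(0)
filters = rng.standard_normal((8, 7, 7))
hist = bsif_histogram(rng.random((64, 64)), filters)
print(hist.shape)  # (256,)
```

With n = 8 filters the feature vector has 2^8 = 256 bins, and its entries sum to the number of pixels in the image.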

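Features were extracted from both the original image and a downsampled version of it. Because the filters keep the same size while the image shrinks, downsampling by a factor of two effectively doubles the filter size s relative to the original image.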
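
The two-scale setup can be sketched as follows. This sketch assumes the downsampled image is produced by averaging 2 x 2 pixel blocks, because the exact resizing method is not specified here. It also assumes each scale yields its own feature set. `downsample_by_two` and `features_at_two_scales` are hypothetical names, and `extract` stands for any feature extractor, such as a BSIF histogram.

```python
import numpy as np


def downsample_by_two(image):
    """Halve the resolution by averaging non-overlapping 2 x 2 blocks (assumed scheme)."""
    image = np.asarray(image, dtype=float)
    h, w = image.shape[0] // 2, image.shape[1] // 2
    # Drop a trailing odd row/column, then average each 2 x 2 block.
    return image[: 2 * h, : 2 * w].reshape(h, 2, w, 2).mean(axis=(1, 3))


def features_at_two_scales(image, extract):
    """Apply the same extractor at both scales, keeping one feature set per scale.

    An s x s filter applied to the downsampled image covers roughly a
    2s x 2s region of the original image.
    """
    return {
        "original": extract(image),
        "downsampled": extract(downsample_by_two(image)),
    }


def toy_extract(img):
    """Toy extractor for illustration only: mean intensity and pixel count."""
    return np.array([img.mean(), img.size])


feats = features_at_two_scales(np.arange(16.0).reshape(4, 4), toy_extract)
print(feats["downsampled"])
```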

Models

Three model types are available, all using the implementations provided by OpenCV: support vector machine (svm), random forest, and multilayer perceptron (mlp). These classifiers can be used individually or combined into an ensemble by majority voting, in which the label predicted by the most models becomes the software's output.
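
Majority voting over binary predictions can be sketched as follows. The sketch assumes a hypothetical label encoding (1 = textured contact lens, 0 = authentic iris). It also assumes that ties, which can occur with an even number of models, are resolved toward 0, since the text above does not say how ties are handled.

```python
import numpy as np


def majority_vote(predictions):
    """Combine 0/1 predictions of shape (n_models, n_samples) by majority vote.

    Hypothetical encoding: 1 = textured contact lens, 0 = authentic iris.
    A sample is labeled 1 only if strictly more than half of the models say 1,
    so ties (possible with an even number of models) go to 0 (assumption).
    """
    predictions = np.asarray(predictions)
    n_models = predictions.shape[0]
    votes_for_lens = predictions.sum(axis=0)
    return (2 * votes_for_lens > n_models).astype(int)


# Three models (e.g. svm, random forest, mlp) voting on four samples.
preds = [[1, 0, 1, 0],
         [1, 1, 0, 0],
         [0, 1, 1, 1]]
print(majority_vote(preds))  # [1 1 1 0]
```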

In general, not all models should be used in the ensemble: the final ensemble is selected by comparing the performance of the individual models on a validation set.

Software

The software has been released in C++ and Python versions. C++ was originally chosen for performance and for compatibility with OSIRIS, which would allow automated image segmentation to be incorporated in the future. A Python version was also created for ease of understanding, since a major goal of the project was to enable rapid prototyping of novel solutions.

Two main libraries support the solution: OpenCV for image operations and machine learning model implementations, and HDF5 as the storage format for features. OpenCV is well established in computer vision and is considered state of the art among open source computer vision libraries. HDF5 was chosen to store the feature sets because of its speed and precision.

The software provides an implementation of the method described above that can serve as a baseline for future development as the field of iris PAD advances.

Results

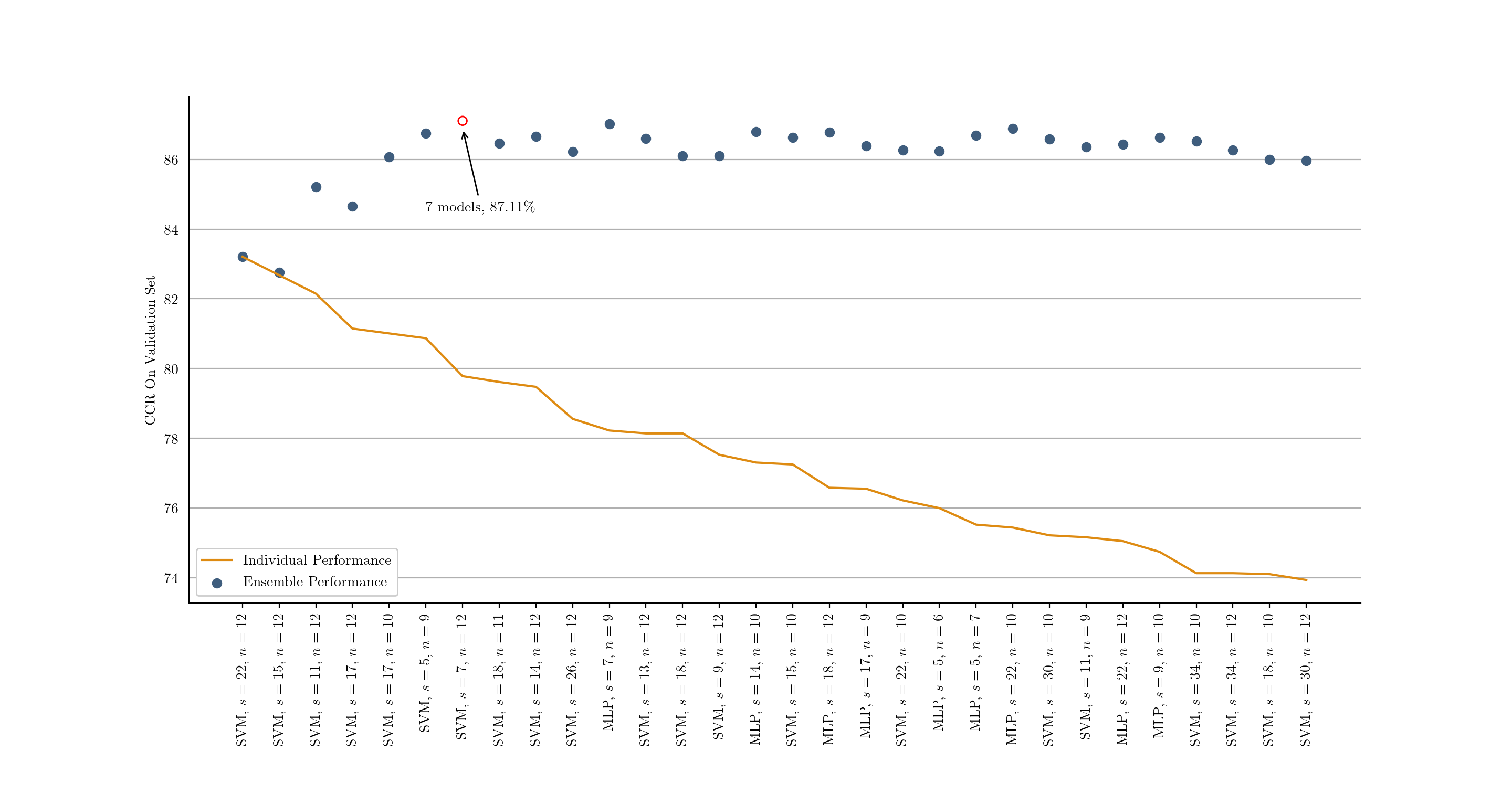

An additional part of this project extended the tests in the original paper to measure the cross-dataset performance of this method. A set of models was first trained on the Notre Dame dataset, resulting in 360 models (3 model types times 120 feature combinations). Two additional datasets, Clarkson and IIITD, served as the validation and testing sets, with each dataset taking each role in turn.
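
The model pool and the two validation/test assignments can be written out as a small experiment grid. This is only a bookkeeping sketch. The feature combinations are identified by index because their exact parameters are not listed here, and the variable names are hypothetical.

```python
from itertools import product

MODEL_TYPES = ("svm", "random forest", "mlp")
N_FEATURE_COMBINATIONS = 120  # identified only by index in this sketch

# One model per (model type, feature combination), all trained on Notre Dame.
model_grid = list(product(MODEL_TYPES, range(N_FEATURE_COMBINATIONS)))

# Cross-dataset protocols as (validation set, test set) pairs.
PROTOCOLS = (("Clarkson", "IIITD"), ("IIITD", "Clarkson"))

print(len(model_grid))  # 360
for validation_set, test_set in PROTOCOLS:
    print(f"select ensemble on {validation_set}, report results on {test_set}")
```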

Validation

Validation was used to select which models form the ensemble. The 360 models were first tested individually on the validation set (Clarkson or IIITD) and ranked by performance (correct classification rate). The models were then added one by one, in rank order, to an ensemble that was re-tested on the validation set after each addition. The ensemble with the highest correct classification rate on the validation set was chosen for testing.
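
The selection procedure can be sketched as a greedy forward pass over the ranked models. This sketch assumes each model's validation predictions are already available as a 0/1 matrix. It further assumes that majority-vote ties go to 0 and that, among equally accurate ensembles, the smallest is kept. `select_ensemble` and the other names are hypothetical.

```python
import numpy as np


def correct_rate(pred, labels):
    """Fraction of samples classified correctly."""
    return float(np.mean(pred == labels))


def majority_vote(predictions):
    """0/1 majority vote over rows; ties go to 0 (assumption)."""
    return (2 * predictions.sum(axis=0) > predictions.shape[0]).astype(int)


def select_ensemble(val_predictions, val_labels):
    """val_predictions: (n_models, n_samples) 0/1 predictions on the validation set.

    Returns (indices of the chosen models, ensemble correct classification rate).
    """
    val_predictions = np.asarray(val_predictions)
    val_labels = np.asarray(val_labels)
    individual = [correct_rate(p, val_labels) for p in val_predictions]
    # Rank models from best to worst individual rate (stable for ties).
    order = sorted(range(len(individual)), key=lambda i: individual[i], reverse=True)
    best_size, best_rate = 0, -1.0
    for k in range(1, len(order) + 1):
        # Ensemble of the k best-ranked models, evaluated on the validation set.
        rate = correct_rate(majority_vote(val_predictions[order[:k]]), val_labels)
        if rate > best_rate:  # strict: keep the smaller ensemble on ties (assumption)
            best_size, best_rate = k, rate
    return order[:best_size], best_rate


labels = [0, 0, 1, 1, 1]
preds = [[0, 0, 1, 1, 0],
         [0, 1, 1, 1, 1],
         [1, 0, 1, 1, 1],
         [1, 1, 0, 0, 0]]
print(select_ensemble(preds, labels))  # ([0, 1, 2], 1.0)
```

In this toy example no single model exceeds 80%, but the three best models together reach 100% because their errors fall on different samples. That is the effect the one-by-one validation search is meant to find.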

Testing

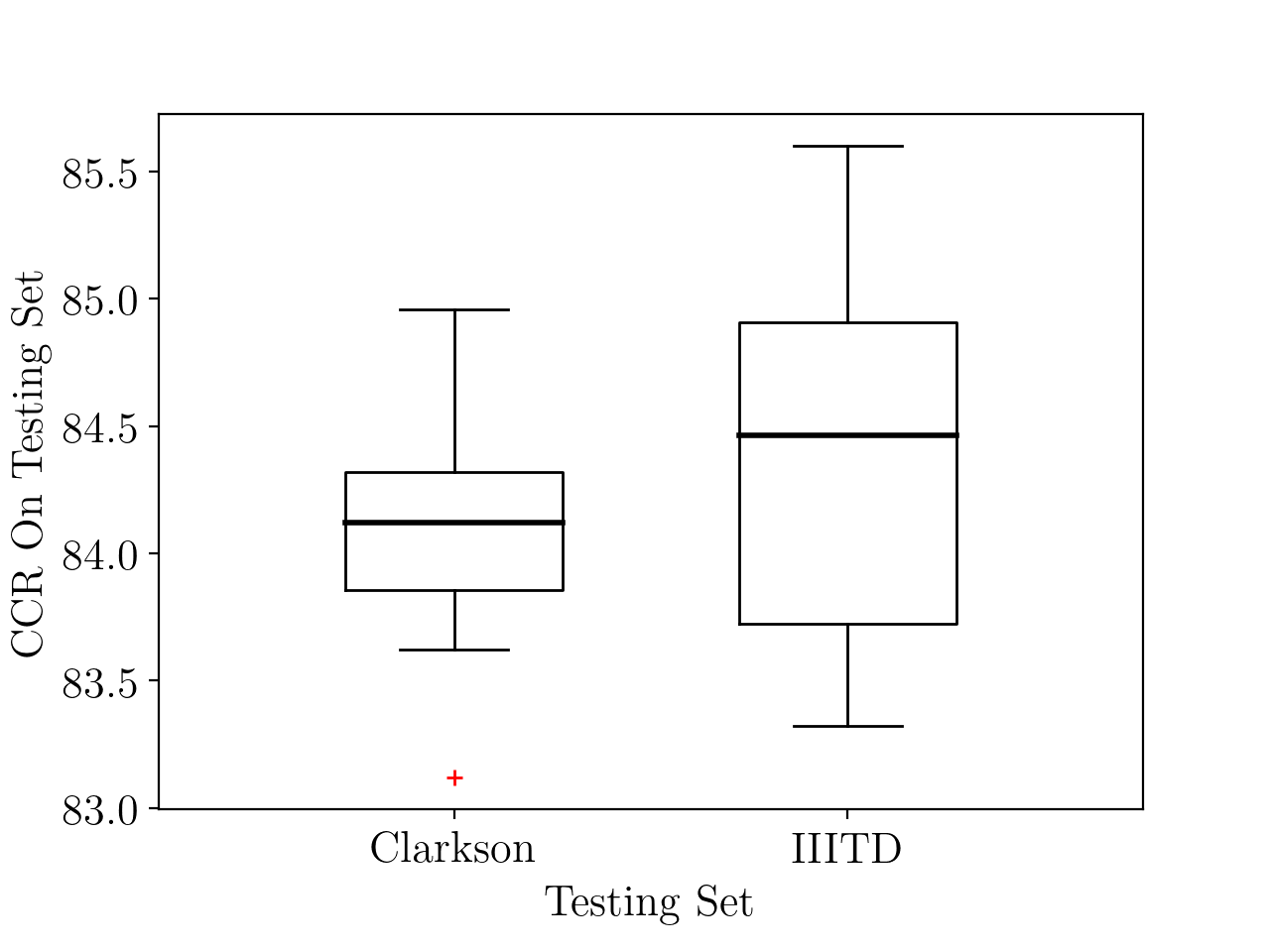

The ensemble selected during validation was used to classify the dataset not used for validation (Clarkson or IIITD). The test was repeated 10 times, each time on a randomly selected subset of the test set, to estimate the variance of the correct classification rate. The selected ensembles achieved a correct classification rate of around 84% on both the Clarkson and IIITD datasets. This is on par with the winner of the LivDet-Iris 2017 competition (90% on Clarkson and 83% on IIITD). The comparison is not direct, however, because the LivDet competition also includes paper printouts of irises, which were not considered here.
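
The repeated evaluation can be sketched as computing the correct classification rate on 10 random subsets and reporting the mean and sample standard deviation. Several details are assumptions because the text does not specify them: the subset size (half of the test set here), sampling without replacement, and the name `repeated_subset_accuracy`. The data in the usage example is synthetic.

```python
import numpy as np


def repeated_subset_accuracy(predictions, labels, n_repeats=10,
                             subset_fraction=0.5, seed=0):
    """Mean and sample standard deviation of the correct classification rate
    over n_repeats random subsets of the test set.

    subset_fraction and sampling without replacement are assumptions.
    """
    predictions = np.asarray(predictions)
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    size = max(1, int(subset_fraction * len(labels)))
    rates = []
    for _ in range(n_repeats):
        # Draw a fresh random subset of test samples for each repetition.
        idx = rng.choice(len(labels), size=size, replace=False)
        rates.append(np.mean(predictions[idx] == labels[idx]))
    rates = np.asarray(rates)
    return float(rates.mean()), float(rates.std(ddof=1))


# Synthetic example: a hypothetical classifier that is right about 80% of the time.
rng = np.random.default_rng(42)
test_labels = rng.integers(0, 2, size=1000)
test_predictions = np.where(rng.random(1000) < 0.8, test_labels, 1 - test_labels)
mean_rate, std_rate = repeated_subset_accuracy(test_predictions, test_labels)
print(f"{mean_rate:.3f} +/- {std_rate:.3f}")
```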